by Bob Shively, Enerdynamics President and Instructor

Wind power is big business in many states and is only growing bigger as market demand for renewable power grows. According to the American Wind Energy Association:

“The first quarter of 2011 saw over 1,100 megawatts (MW) of wind power capacity installed – more than double the capacity installed in the first quarter of 2010. The U.S. wind industry had 40,181 MW of wind power capacity installed at the end of 2010, with 5,116 MW installed in 2010 alone. The U.S. wind industry has added over 35% of all new generating capacity over the past 4 years, second only to natural gas, and more than nuclear and coal combined.”

(source: http://www.awea.org/learnabout/industry_stats/index.cfm)

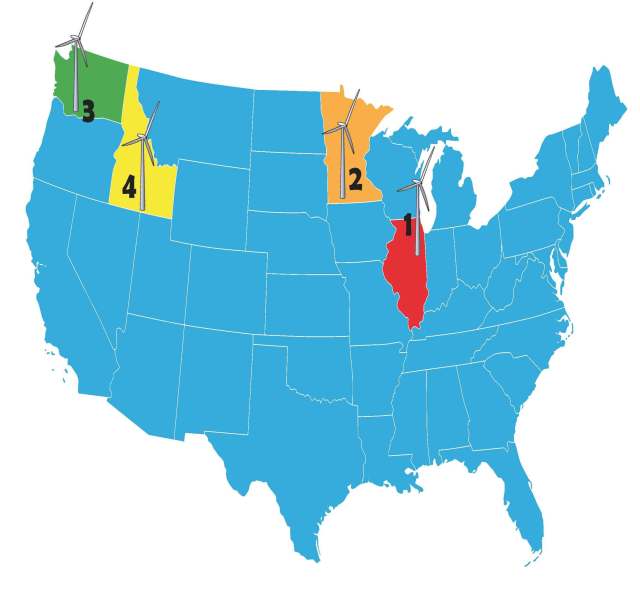

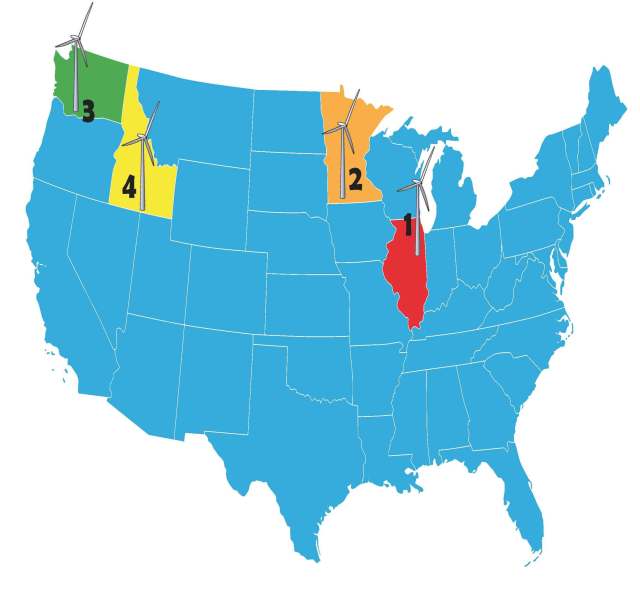

So who is building all these projects and where are they being built? While Texas, Iowa, and California currently have the most installed wind capacity in the U.S., wind generation capacity grew the most in the first quarter of 2011 in Minnesota, Illinois, Washington, Idaho and Nebraska.

The biggest projects installed in the first quarter of 2011 include:

1 – Big Sky Wind Facility: Sited in the northern Illinois counties of Bureau and Lee, the Big Sky Wind Facility was the country’s largest project installed in Q1 2011 by nameplate rating (source: American Wind Energy Association, 1st Quarter 2011 Market Report). Developed by Chicago-based Midwest Wind Energy and owned by Edison Mission Group, the 239.4 MW Big Sky project comprises 114 Suzlon-manufactured turbines spread across 13,000 acres of elevated farmland. Each turbine is rated at 2.1 MW.

Additionally, an 18-mile, 138 kV transmission line developed and permitted by Midwest Wind Energy connects the Big Sky project to the transmission network. Wind resources in northern Illinois have the advantage of being close to Chicago’s huge electric load, so less transmission investment is required to deliver wind-generated power to where it is consumed. According to the Illinois Wind Energy Association, “parts of Northern Illinois are on the PJM electric grid, a regional transmission organization serving a market of over 50 million people in 13 states. Illinois has the strongest winds in the PJM market, making Illinois projects very attractive to utilities in several states.” Big Sky Wind plans to sell into the PJM marketplace as a merchant generator.

2 – Bent Tree Wind Farm: Southern Minnesota’s strong and consistent prairie winds feed the Bent Tree Wind Farm, which began commercial operation of Phase One in February 2011 to help meet the power needs of customers in neighboring Wisconsin. Bent Tree’s Phase One nameplate rating is 201.3 MW, enough to power about 50,000 homes. If and when subsequent phases are completed, the entire farm could deliver 400 MW of wind power.

Sited near the town of Albert Lea (100 miles due south of Minneapolis), Bent Tree spans 32,500 acres. It was developed by Wind Capital Group and Alliant Energy, though it is owned and operated by Alliant Energy’s subsidiary Wisconsin Power and Light Company (WPL). Each of its 122 current wind turbines was manufactured by Vestas and carries a 1.65 MW rating. According to AlliantEnergy.com: “The addition of Bent Tree allows WPL to meet and exceed Wisconsin’s existing Renewable Portfolio Standard. … A decision on the remaining 200 MW of wind capacity will likely be driven by Renewable Portfolio Standards.” Given this statement, we can conclude that the project sells its power to WPL through bilateral agreements so that WPL can meet its state Renewable Portfolio Standard (RPS) requirements.

3 – Juniper Canyon: South central Washington’s Klickitat County is home to the Juniper Canyon Wind Farm, a 250 MW, two-phase energy project located on roughly 28,000 acres of undulating terrain between the towns of Bickleton and Roosevelt. The first phase of the project (Juniper Canyon I) was installed in Q1 2011 and comprises sixty-three 2.4 MW turbines manufactured by Mitsubishi, for a total capacity of 151.2 MW. When complete, the Pacific Wind Development project will include up to 128 turbines and a total proposed generating capacity of up to 250 MW, enough to provide renewable energy to 75,000 area homes.

Pacific Wind Development is a wholly owned subsidiary of Iberdrola Renewables, Inc., which independently owns and operates Juniper Canyon I (and will do the same for Juniper Canyon II once it is built). Iberdrola also independently built, owns and operates a transmission line to the Bonneville Power Administration’s (BPA) interconnection point. BPA is purchasing the power generated by Juniper Canyon I and transmitting it to BPA’s Rock Creek substation via 230 kV transmission lines.

4 – Idaho Wind Partners: Idaho Wind Partners, a collaboration among GE Energy Financial Services, Exergy Development Group, Atlantic Power Corp. and Reunion Power, began operation on its $500 million, 11-site wind project earlier this year. The portfolio of wind farms is located in southern Idaho and has the capacity to deliver 183 MW of wind power using GE Energy-made turbines, each with a 1.5 MW rating (source: http://www.nawindpower.com/e107_plugins/content/content.php?content.7296). Eight of the wind farms are located in Hagerman, Idaho, while three others are 70 miles away in Burley. Despite the distance, all operate on a unified system and all deliver power to Idaho Power Co. as part of a 20-year agreement.
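The capacity figures quoted for these projects are simple multiplication: nameplate capacity equals the number of turbines times each turbine’s rating. The minimal Python sketch below, assuming only the turbine counts and ratings given above, checks that arithmetic and backs out the Idaho Wind Partners turbine count, which is not stated directly. It also computes the homes-per-MW ratio implied by the Bent Tree and Juniper Canyon “homes powered” claims; the assumptions behind those home counts (capacity factor, household usage) are not given here. The `WindProject` class and the helper functions are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WindProject:
    """Hypothetical record of a wind project's turbine fleet."""
    name: str
    turbines: int
    turbine_rating_mw: float

    def nameplate_mw(self) -> float:
        # Nameplate capacity = turbine count x per-turbine rating,
        # rounded to 0.1 MW to hide floating-point noise.
        return round(self.turbines * self.turbine_rating_mw, 1)


# Turbine counts and ratings as quoted in the project descriptions.
PROJECTS = [
    WindProject("Big Sky", 114, 2.1),
    WindProject("Bent Tree Phase One", 122, 1.65),
    WindProject("Juniper Canyon I", 63, 2.4),
]


def turbines_needed(capacity_mw: float, rating_mw: float) -> int:
    # Back out the turbine count when only total capacity and the
    # per-turbine rating are known (e.g. Idaho: 183 MW of 1.5 MW units).
    return round(capacity_mw / rating_mw)


def homes_per_mw(homes: int, capacity_mw: float) -> float:
    # Ratio implied by a developer's "enough to power N homes" claim.
    return homes / capacity_mw


if __name__ == "__main__":
    for p in PROJECTS:
        print(f"{p.name}: {p.turbines} x {p.turbine_rating_mw} MW = {p.nameplate_mw()} MW")
    print("Idaho Wind Partners turbines:", turbines_needed(183, 1.5))
    print(f"Bent Tree homes per MW: {homes_per_mw(50_000, 201.3):.0f}")
    print(f"Juniper Canyon homes per MW: {homes_per_mw(75_000, 250):.0f}")
```

The two implied ratios (roughly 248 and 300 homes per MW) differ, a reminder that “homes powered” figures rest on assumptions that vary from one developer to the next.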

On one hand, renewables and natural gas generation are symbiotic: variable renewable resources need flexible generation to pick up load when the wind doesn’t blow or the sun doesn’t shine, and other than limited hydro resources, gas generation is best suited to provide that flexibility. On the other hand, the renewable industry realizes that cheap gas generation makes it harder for renewables to compete on price.

At a recent