by Bob Shively, Enerdynamics’ President and Lead Instructor

The U.S. electric power industry was born in 1882 when Thomas Edison’s Edison Electric Illuminating Company opened a central generating station at Manhattan’s Pearl Street. Soon thereafter a number of small distribution systems were created, and, by the early 1900s, electric utilities became widespread in cities.

The steam-powered reciprocating engine, which spun an electric generator, served as the original technology for Edison’s power generation. Early utilities used this technology plus hydropower to grow their systems. But the reciprocating engines, which converted up-and-down motion to the rotary motion required by generators, proved to be noisy, bulky and hard to maintain.

Meanwhile in England, Charles Parsons invented the steam turbine, which directly produced rotary motion as steam passed through vanes on a long shaft:

(Click on image above or click here to see a short video of how a steam turbine works)

The steam turbine proved to be smaller, mechanically simpler and quieter than reciprocating engines, and it could be scaled up to produce large amounts of electricity. As steam turbines were deployed in the early 1900s, the cost of power plunged and society became dependent upon electricity.

Into the 1990s, the steam turbine remained the favored technology for utility power plants. It also proved versatile, since it could be fueled by coal, natural gas and, later on, uranium in nuclear power plants. Even today, more than 70% of U.S. electricity is generated using steam turbines.

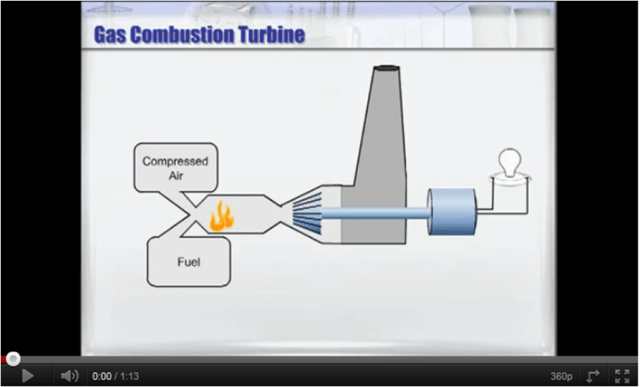

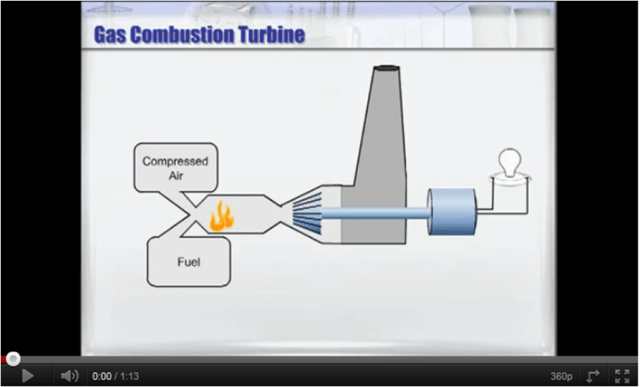

In recent years the steam turbine has lost much of its market share to a newer technology: the gas turbine. A gas turbine ignites a mixture of compressed air and fuel, and the resulting hot gases expand directly through a turbine:

(Click on image above or click here to see a short video of how a gas combustion turbine works)

First used for electric generation in 1939 in Switzerland, gas turbines gained the notice of the utility industry when a gas turbine power plant on Long Island provided power to recover from the infamous New York blackout of 1965. Initially gas turbines were used mostly for peaking purposes, but another innovation led to more widespread use: using a gas turbine as the primary source of power, then using the turbine’s waste heat to create steam for use in a steam turbine.

This technology, called the combined-cycle gas turbine, became the new favored source of power in the mid-1990s:

(Click on image above or click here to see a short video of how a combined-cycle gas turbine works)

Its benefits included operating flexibility, low up-front capital costs, and reduced environmental impacts relative to steam turbines powered by coal. Today, the majority of new power plant installations in the U.S. utilize some form of gas turbine technology.

So for the first 100 years of the power industry, the steam turbine dominated. In the last 20 years, the gas turbine has taken the lead in new installations. But in today’s world of rapid advancement, could yet another technology or technologies be on the cusp of supremacy?

U.S. Energy Information Administration (EIA) data on new power plants for the first six months of 2011 indicate that 51% of new plants used gas turbines or combined-cycle turbines, 24% used steam turbines and 24% used wind turbines. Indeed, it appears that wind will become an important source of power. But another renewable technology is worth keeping our eyes on, the photovoltaic (PV) cell:

(Click on image above or click here to see a short video of how PV cells work)

While PV is currently a very small part of new installations, falling costs could rapidly change this picture.

As discussed in our recent blog post “Can Solar Power on Our Rooftops Compete with Existing Generation on Price?”, there is a distinct possibility that PV could compete with coal-fired steam turbines directly on price within the next decade. If so, expect to see a third revolution in generation technology.

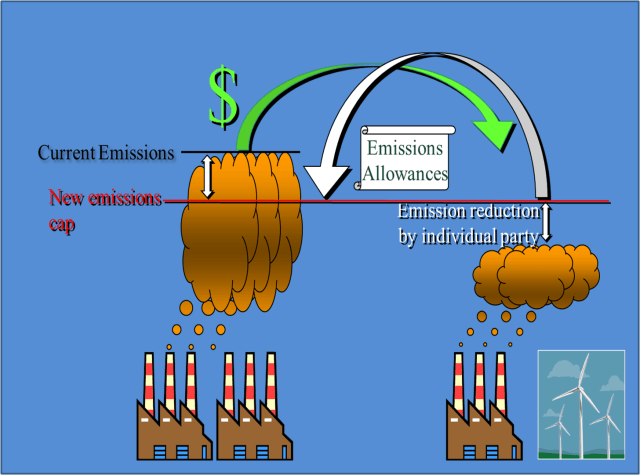

